Apple M1: Why It Matters

Ever since Apple launched its M1 processor and showed it running fast and cool on new MacBooks, the tech community has been abuzz testing the SoC and trying to draw comparisons to see where the M1 stands in terms of performance and efficiency against its Intel or AMD counterparts.

Needless to say, it's not a straight line you can draw when Intel and AMD run x86 applications, and M1 runs native Arm code and can also translate x86. Some will dismiss M1 efforts as being only for Apple devices (true), while others may see "magic" happening when Apple has been able to deliver a fast laptop that gets iPad-like battery life on their first attempt (also true).

In this article, we'd like to share a few of our thoughts on why Apple M1 is a very relevant development in the world of computer hardware. For us, this is akin to Intel joining the GPU wars in 2022. It's just the kind of thing that doesn't happen every day, or every year. And now Apple has effectively entered the mainstream CPU market, to rival the likes of Intel, AMD and Qualcomm.

The transition

M1 marks the first big architectural transition for the Mac since 2006, when Apple scrapped PowerPC in favor of Intel processors. Now the Cupertino giant is betting its entire future on Arm-based chips developed fully in-house, leaving Intel behind and becoming more technologically self-sufficient.

The first devices powered by the Apple M1 include the MacBook Air, MacBook Pro 13 and Mac mini. This is relevant because the MacBook Air is their least expensive and most popular laptop. The Air is now also fanless.

Inside the MacBook Air: no fans. Image: iFixit

These first M1 computers are not performance-oriented models. Apple's breakup with Intel kickstarts a 2-year migration process, meaning the entire Mac lineup (MacBook Pro, iMac, Mac Pro) will move to Arm-based custom silicon.

Leaving Intel behind

Intel has been struggling with manufacturing after years of relentless advances. Apple saw this coming years ahead and started working on its own desktop chip before really needing it. The vertical integration that Apple is achieving goes back to its roots and how it's always perceived computers.

The biggest benefits Apple will get from its switch to Arm are system integration and efficiency. When they used Intel x86 before, they could only choose from a handful of offerings: basically whatever Intel thought would be a good idea. If Apple wanted to tweak something, like adding more GPU performance or removing unused parts of a processor, that wasn't possible. Arm, on the other hand, is nearly infinitely customizable. What Arm creates are blueprints and small pieces of intellectual property. It's like going to eat at a buffet where you can pick and choose just the things you want. This switch to Arm allows Apple's engineers to design chips that perfectly fit their needs rather than having to settle for one of Intel's off-the-shelf chips.

Intel makes great CPUs, but nothing can match the performance and efficiency of a fully-custom design. Apple was supposedly the "number one filer of bugs in the [x86] architecture" according to one of their former engineers. Quality issues with Skylake finally pushed Apple over the edge to decide to just build their own CPUs. The decision will hurt Intel's bottom line, but not that much. Apple only accounts for around 3% of Intel's sales.

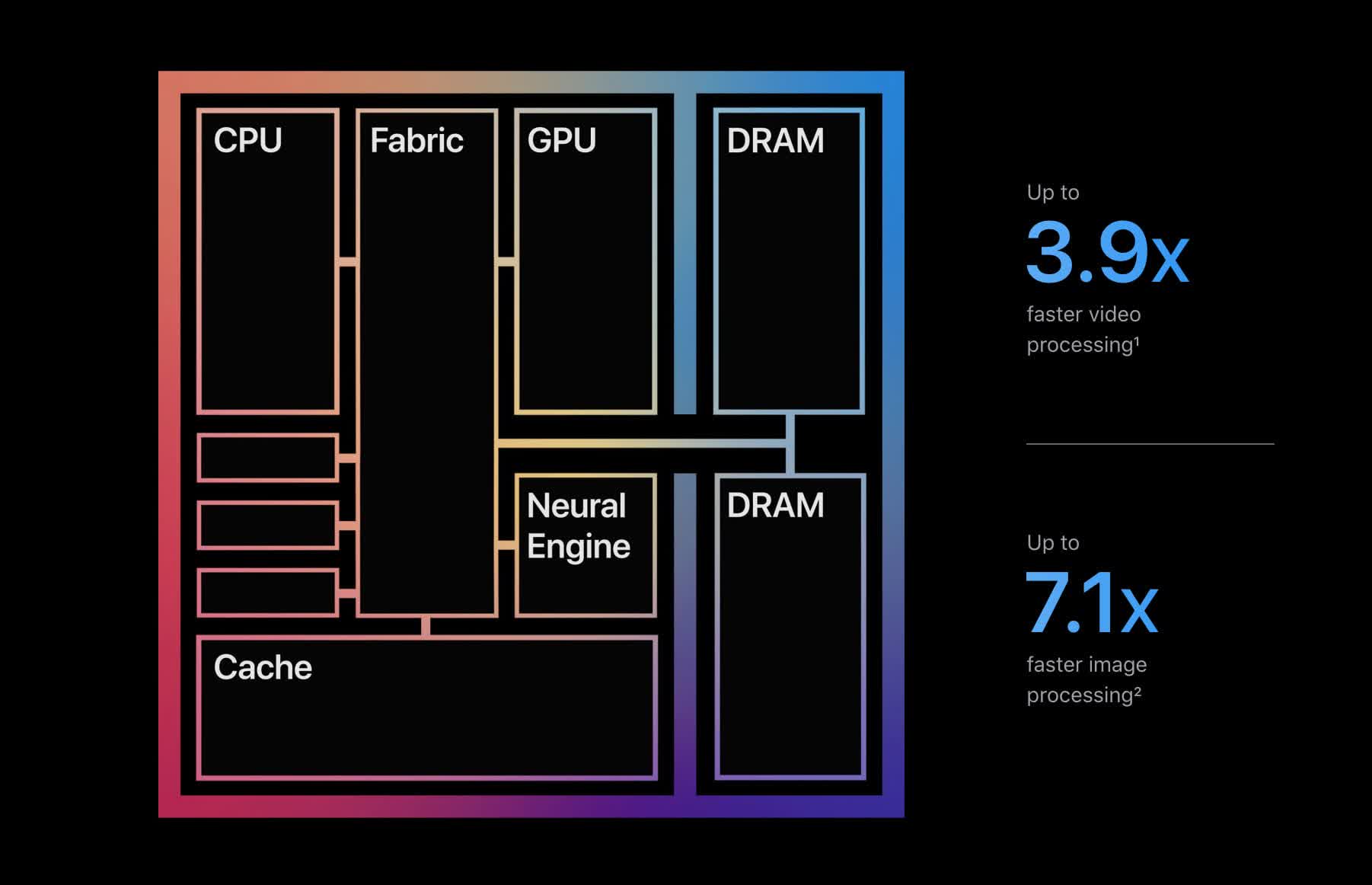

Not a CPU, an SoC

Not only is the initial M1 hardware capable, it is also very efficient. Plus, it is an SoC, so processing, graphics, I/O and system memory all sit in the same package. It's likely Apple had a lesser version of the M1 ready over a year ago, but they waited until they could leapfrog the rest of the industry in terms of performance per watt.

It's also clear that Apple has leveraged its decade-long experience working on specialized hardware for the iPhone. By applying some of those principles to desktop hardware, it's brought on hardware-level optimizations for typical workloads, which means M1 can be extremely fast for some tasks including JavaScript, encoding/decoding, image processing, encryption, AI and (very clever of Apple) even x86 emulation. This reminds us of Intel MMX extensions of yesteryear, but on steroids.

Power and cooling have been a big limitation in how fast processors can go. You can only build a chip as fast as you can safely cool and power it. The preliminary performance and efficiency numbers for the M1 are where Apple deserves the most praise. Keep in mind that the M1 is essentially a beefed up iPhone A14, but that's only the start. It can't compete with high end CPUs in performance, but it isn't trying to yet. This is the first generation of what will likely be a long line of processors.

The M1's performance and energy efficiency compared to other low-power CPUs is great and is the biggest benefit of switching Macs over to Apple silicon.

Love and hype for Apple?

As tech enthusiasts, we have nothing but admiration for the engineering teams at chip makers like Intel, AMD, Nvidia and Qualcomm. The fact that Apple has been able to join the fray, building a world-class team capable of surpassing the likes of Qualcomm and other mobile makers first, and now playing the same game as AMD and Intel, is impressive.

I haven't plugged in this M1 Mac in almost 2 days. It's only half dead. lol. What is this sorcery? 🔋

Apple Silicon Macs are the future, man. Competing laptops are gonna have a hard time catching up. pic.twitter.com/FmX5uVKkFd

— Computer Clan (@thecomputerclan) Nov 20, 2022

Or a not so impressive view...

At the same time, this isn't necessarily as big of a deal as the hype makes it seem. Apple didn't invent anything new or particularly novel. To grossly over-simplify, what Apple has done is build a beefed up iPhone CPU and put it in a laptop. Remember that Apple has been building iPhone SoCs in-house for over a decade, so they aren't exactly new to the game. That's not to say that Apple isn't worthy of praise for their accomplishments. To pull this off, they've gambled potentially billions of dollars in R&D on the hope that this switch will be beneficial in the long term.

What's the deal with UMA?

Unified Memory Architecture or UMA is one area that has potential for Apple to greatly improve performance and efficiency. UMA means the CPU and GPU work together and share the same memory. On a traditional system, the RAM is used by the CPU and the graphics card has its own dedicated video memory. Imagine you're trying to send your friend a message. The traditional approach to CPU and GPU memory is like putting a letter in the mail and waiting for it to be delivered to them. This approach is slow since all messages have to go through the post office. To help make this faster, a technology called Direct Memory Access or DMA can be used, where one device can directly access the memory of another. This is like your friend giving you a key to their house so you can just stop by to drop off the message. It's faster, but you still have to travel and get into their house. UMA is the equivalent of moving in and sharing the same house; there's no need to wait or travel anywhere to send a message.

UMA is great for low-power applications where you want maximum integration to save on space and power consumption. However, it does have performance issues. There's a reason why high-end dedicated graphics cards are orders of magnitude faster than integrated graphics. You can only fit so much stuff on a chip. There are other issues that arise with resource contention. If you're doing a very GPU intensive task that is using up lots of memory, you don't want it to choke out the CPU. Apple has done an excellent job of managing this to ensure a resource hog in one area doesn't bring down the entire system.
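To make the difference between separate and shared memory pools a bit more concrete, here is a minimal toy sketch in Python. It does not touch real GPU memory or any Apple API: plain byte buffers stand in for CPU and GPU memory, and the function names (`discrete_memory_pass`, `unified_memory_pass`) are made up for illustration. The only point is that the first model pays for a copy before any work happens, while the second works on the very same bytes.

```python
from typing import Tuple

# Toy model only: Python buffers stand in for CPU and GPU memory.
# Both function names are hypothetical and exist just for this illustration.


def discrete_memory_pass(cpu_data: bytearray) -> Tuple[bytearray, int]:
    """Separate pools: data is copied into the 'GPU' pool before it is used."""
    gpu_data = bytearray(cpu_data)      # explicit copy into a second buffer
    bytes_copied = len(gpu_data)
    for i in range(len(gpu_data)):      # stand-in for GPU work: invert each byte
        gpu_data[i] ^= 0xFF
    return gpu_data, bytes_copied


def unified_memory_pass(shared: bytearray) -> Tuple[memoryview, int]:
    """One shared pool: the 'GPU' gets a view onto the same bytes, no copy."""
    gpu_view = memoryview(shared)       # zero-copy view of the same buffer
    for i in range(len(gpu_view)):      # same work, done in place
        gpu_view[i] ^= 0xFF
    return gpu_view, 0


if __name__ == "__main__":
    frame = bytearray(b"\x00\x01\x02\x03")
    _, copied = discrete_memory_pass(frame)
    print("discrete: bytes copied =", copied, "| original untouched:", bytes(frame))

    frame = bytearray(b"\x00\x01\x02\x03")
    _, copied = unified_memory_pass(frame)
    print("unified:  bytes copied =", copied, "| original now:", bytes(frame))
```

The sketch also hints at the contention issue: in the shared model both sides see every change, so whoever hogs the buffer affects the other.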

Not just hardware, but software

Moving macOS to Arm so seamlessly is no small feat. We know Microsoft has struggled with the same for years. So Apple ported macOS and all first party apps to Arm, developed Rosetta translation for x86 compatibility, and worked on the developer tools that will ease the transition for all developers already invested in the Mac ecosystem.

Changing the scaled display resolution on the new #AppleM1 MacBook (left) is absolutely instantaneous 🔥 compared to the delay and screen blanking required by the Intel graphics on the 16 inch MacBook Pro (right) pic.twitter.com/YybbPF09TF

— Daniel Eran Dilger (@DanielEran) Nov 20, 2022

Apple had been using Intel x86 CPUs in their Mac line of products since 2006. Before that they used PowerPC, and Motorola even earlier. Each switch in architectures has a large list of pros and cons. The biggest problem with switching architectures is that all software must be recompiled.

It's like the operating system is speaking English while the processor speaks French. They have to match or nothing will work. It's easy to do this statically for a few apps, but very hard to do across an entire ecosystem. The benefits of switching architectures can include increased efficiency, lower cost, higher performance, and many more.

x86, Rosetta and compatibility

We said earlier that the switch to Arm means Macs will speak another language. Rosetta translates applications from x86 to Arm. It can either perform this translation ahead of time when an application is installed or in real time while an application is running. This is no easy task considering the complexity and latency requirements.
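The two translation modes described above can be sketched with a toy translator. This is not how Rosetta is implemented: the `Translator` class, the `OPCODE_MAP` table and the one-to-one mnemonic mapping are all assumptions made up for illustration, and real binary translation is far more involved. The sketch only shows the trade-off: ahead-of-time translation pays the whole cost at install, while on-demand translation pays it the first time each block runs and then reuses a cache.

```python
from typing import Dict, List

# Hypothetical one-to-one mapping between a few x86-style and Arm-style
# mnemonics. Real translation is nothing like a simple table lookup.
OPCODE_MAP: Dict[str, str] = {"mov": "MOV", "add": "ADD", "jmp": "B"}


def translate_block(block: List[str]) -> List[str]:
    """Translate one block of toy 'x86' mnemonics into toy 'Arm' mnemonics."""
    return [OPCODE_MAP[op] for op in block]


class Translator:
    """Toy translator that supports both modes and caches translated blocks."""

    def __init__(self, program: Dict[str, List[str]]) -> None:
        self.program = program
        self.cache: Dict[str, List[str]] = {}
        self.translations = 0  # counts how many blocks were actually translated

    def _translate(self, name: str) -> None:
        self.cache[name] = translate_block(self.program[name])
        self.translations += 1

    def translate_ahead_of_time(self) -> None:
        """'Install time' mode: translate every block before anything runs."""
        for name in self.program:
            if name not in self.cache:
                self._translate(name)

    def run_block(self, name: str) -> List[str]:
        """'Run time' mode: translate on first use, then serve from the cache."""
        if name not in self.cache:
            self._translate(name)
        return self.cache[name]


if __name__ == "__main__":
    app = {"start": ["mov", "add"], "loop": ["add", "jmp"]}

    jit = Translator(app)
    jit.run_block("loop")
    jit.run_block("loop")              # second call hits the cache
    print("on demand, blocks translated:", jit.translations)

    aot = Translator(app)
    aot.translate_ahead_of_time()
    print("ahead of time, blocks translated:", aot.translations)
```

In this toy model the latency concern shows up as the extra work inside the first `run_block` call for a block that was never translated before.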

The fact that Apple has even beaten out Intel hardware running the same code in certain circumstances deserves a big round of applause for the Rosetta team. It's not perfect though. Some programs run at 50% of their speed compared to native x86 hardware, and some just don't work at all. That's not the end of the world though. Rosetta is only intended to make the transition easier by offering a method to keep running x86 apps before developers have ported their code over to Arm.

Apple hasn't reinvented the wheel with M1, but they have more or less started producing their own custom modified wheels. Intel and AMD will still dominate the high performance CPU market for years to come, but Apple isn't necessarily that far behind. You can't just crank this stuff out overnight, so it will take some time.

PC gamers won't care

In the short to mid term, gamers, enthusiasts, and PC builders are entirely unaffected. It's going to take Apple one or two more release cycles to match the best you can buy on a desktop today, but even when/if they do, Apple's ecosystem is not the same place where gamers live. At the same time, for every user that will buy only Apple, there's at least one that will never buy Apple, too.

What were chip makers doing all this time?

A fairly typical question we've seen asked in the past month: why haven't AMD or Intel been doing this or that? How is it possible that all of a sudden Apple has come up with a novel way to integrate memory into the CPU and get more efficient?

Remember that if not for AMD, the desktop PC space would have been stagnant this past half decade. But just like AMD has been working hard on building the Zen architecture for desktop, workstation, and server workloads, Apple has been doing the same but building from a more constrained, mobile scope.

Image: iFixit

There's still much to learn about how far Apple can push M1, its successors, and UMA toward building a more complex chip that can scale to have more cores and memory.

How the PC industry can benefit

Engineers have been able to optimize software to run better on given hardware for a long time. Since Apple is now designing their own desktop processors, they can also optimize the hardware to run the software better.

That's a genuine threat to the Windows PC ecosystem, and staying behind is not an option. Thus, we wouldn't be surprised if some of the key players in that space (Microsoft, AMD, Intel, Nvidia, HP, Dell, Lenovo, etc.) start working together to offer similar optimizations on hardware/software to make PCs faster, better, or more efficient.

A prime example of this is next-gen gaming consoles getting fast storage and I/O, thanks to tightly integrated hardware and software that allows for such an experience. Nvidia was keen to announce that RTX graphics cards could provide such a path to low latency and faster storage with RTX I/O, while a more direct equivalent to Xbox Series X's solution will be made available as a DirectX 12 feature called DirectStorage.

It's been characteristic of the hardware industry that when a new player or technology enters the market, it does so by disrupting the status quo. Apple's M1 has done just that.

Source: https://www.techspot.com/article/2167-apple-m1-why-it-matters/

Posted by: molinathares.blogspot.com
